Neural Networks

Brief table of contents

Part 1. What are neural networks and what can they do?

- Neural networks solve intellectual problems

- Types of problems that neural networks solve

- Differences between neural networks and classical machine learning

- The structure of the biological neuron and the model of the artificial neuron

- Types of training

- Neural network architectures

- How does a neural network get into production?

- Deep learning libraries and how we will work

Part 2. How to write a neural network in Python?

- Writing code to recognize handwritten digits

- Creating and training a neural network

- Analysis of training results and recognition of data from the test set

- How to research further

What are neural networks and what can they do?

Neural networks solve intellectual problems

Let's start with the main point. The main purpose of neural networks is to solve intellectual problems.

To understand what an intellectual task is and what it is not, let's look at an example.

Let's say you're at a webinar. There is a presenter, there are viewers, and there is a certain service that provides the broadcast.

What kind of work does the webinar service software do?

It receives the video stream from the host's camera, compresses it according to certain rules, sends it over the network to each participant, decompresses the stream, and displays it to the viewer.

The same thing happens with the presentation that the presenter demonstrates, with the chat, and so on.

The operation of such software is a fully deterministic algorithm:

- get video stream from host's camera

- compress it

- transmit as packets over a specific protocol to the viewer

- decompress back to video stream

- display it to the viewer

What tasks are neural networks designed to solve? Their strength lies in solving so-called intellectual tasks, which are of a different kind, for example:

- face recognition

- chair recognition in a shopping center (distinguish KFC chairs from McDonald's)

- Tesla autopilot, etc.

These tasks are called intellectual because there are no predefined steps for solving them and no clearly defined sequence of actions; instead, the neural network itself learns in the process of solving such a task.

The place of neural networks in Data Mining and Machine Learning

Now let's look at the place of neural networks within the larger field of Data Mining. Data Mining itself is not shown in the illustration below, because it is an even more general concept that includes all the other components reflected in the diagram.

Data Mining is direct work with data and its analysis by a person using their own intellectual resources.

A classic example of an application program used in the field of Data Mining is the widely used MATLAB package.

In Data Mining, the researcher personally works on the problem and immerses themselves in it. As a rough analogy, recall the famous film "A Beautiful Mind", in which the main character sits surrounded by data, thinks, and cracks a cipher.

Another example illustrates the difference between Data Mining and Machine Learning well. While many people were trying to break the codes of the German Enigma cipher machine manually, with their own heads, the English mathematician and cryptographer Alan Turing designed a machine that would break the code itself.

And the machine eventually won.

Machine Learning is the training of a machine so that it solves a certain type of problem without human intervention.

Another example is credit scoring in a bank, i.e. creating a reliable system for assessing a person's creditworthiness (credit risk) based on numerical statistical methods.

In many cases, this is still done manually. That is, a person analyzes a large amount of data on who does and does not repay loans and tries to find the parameters that correlate with a borrower's solvency.

This is an example of ordinary, classic Data Mining.

Machine Learning, on the other hand, is when we run an algorithm that processes this data for us. The algorithm is written by a human, but it is the algorithm itself that finds the patterns in the data that matter to us.

At the same time, the algorithm will most likely not present these patterns in a form we can understand, but it will have the necessary predictive power and will be able to give a fairly accurate forecast of a potential borrower's solvency.
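To make the contrast concrete, here is a minimal sketch of the machine learning approach to credit scoring. It uses logistic regression trained by gradient descent, a classical model chosen here for brevity (not a neural network, and not something the text prescribes), on a tiny hypothetical dataset; the features, numbers, and function names are illustrative assumptions. The human writes only the training procedure; the weights linking income and debt to repayment are found by the algorithm.

```python
import math

# Hypothetical toy data: (income in tens of thousands, debt-to-income ratio) -> 1 = repaid, 0 = defaulted
borrowers = [
    ((5.0, 0.10), 1), ((6.0, 0.20), 1), ((4.5, 0.15), 1), ((7.0, 0.30), 1),
    ((2.0, 0.80), 0), ((1.5, 0.60), 0), ((2.5, 0.90), 0), ((3.0, 0.70), 0),
]

def sigmoid(z):
    # Numerically stable logistic function: maps any score to a probability in (0, 1)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic_regression(data, lr=0.1, epochs=2000):
    """Stochastic gradient descent on log-loss; returns (weights, bias)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in data:
            error = sigmoid(w[0] * x1 + w[1] * x2 + b) - y  # gradient of log-loss w.r.t. the score
            w[0] -= lr * error * x1
            w[1] -= lr * error * x2
            b -= lr * error
    return w, b

def repayment_probability(model, x1, x2):
    w, b = model
    return sigmoid(w[0] * x1 + w[1] * x2 + b)

model = train_logistic_regression(borrowers)
print(repayment_probability(model, 5.5, 0.15))   # new applicant resembling the "repaid" group
print(repayment_probability(model, 1.75, 0.70))  # new applicant resembling the "defaulted" group
```

Nobody told the program that high income and low debt mean reliability; that relationship ends up encoded in the learned weights.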

Two Kinds of Machine Learning

Next, it is important to understand that machine learning is divided into 2 large classes:

1. Classical machine learning

This is an older approach that uses methods such as least squares, principal component analysis, etc.

These are mathematical methods that draw heavily on mathematical statistics, probability theory, linear algebra, calculus, etc.: operations with matrices, separating surfaces, and so on.
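As an illustration of this classical toolbox, here is the method of least squares for fitting a straight line, written out with its closed-form formulas. The data points are hypothetical and the function name `least_squares_line` is ours.

```python
def least_squares_line(xs, ys):
    """Return (slope, intercept) minimizing the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form solution: slope = sum of cross-deviations / sum of squared x-deviations
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical noisy measurements of a roughly linear dependence
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
slope, intercept = least_squares_line(xs, ys)
print(f"y = {slope:.2f} * x + {intercept:.2f}")  # y = 1.99 * x + 0.05
```

Every step here is a formula derived by a human in advance, which is typical of the classical approach.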

In classical machine learning, the role of the human is very large.

For example, how was face recognition written using classical machine learning?

A special program was written that determined where the eye was, where the ear was, where the mouth was, and so on. To do this, the specialist independently conducted an analysis and created an algorithm that works according to the specified parameters.

For example, the distance between the eyes, the angle of the eye in relation to the nose, the size of the lips, the location of the ears, etc. were calculated.

That is, the person devised the algorithm independently, choosing many parameters and deciding how they correlate with each other, and the machine then performed recognition based on the "landmarks" the person gave it.
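To make this concrete, here is a minimal sketch of hand-engineered face features. It assumes landmark coordinates have already been found by some detector; the dictionary keys, coordinates, and tolerance are hypothetical. The point is that a human chose which ratios to compute and how to compare them.

```python
import math

def face_features(lm):
    """Hand-picked measurements: a human decided these ratios describe a face."""
    eye_gap = math.dist(lm["left_eye"], lm["right_eye"])
    # Dividing by the eye gap makes the features independent of image scale
    return (
        math.dist(lm["nose"], lm["mouth"]) / eye_gap,
        math.dist(lm["left_eye"], lm["nose"]) / eye_gap,
        lm["mouth_width"] / eye_gap,
    )

def same_person(features_a, features_b, tolerance=0.05):
    # Hand-tuned rule: two faces match if every ratio differs by less than the tolerance
    return all(abs(a - b) < tolerance for a, b in zip(features_a, features_b))

# Hypothetical landmark coordinates in pixels
face = {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 60),
        "mouth": (50, 80), "mouth_width": 30}
# The same face photographed twice as close: every measurement is doubled
closer = {"left_eye": (60, 80), "right_eye": (140, 80), "nose": (100, 120),
          "mouth": (100, 160), "mouth_width": 60}
other = dict(face, mouth_width=45)  # a different person with a wider mouth

print(same_person(face_features(face), face_features(closer)))  # True
print(same_person(face_features(face), face_features(other)))   # False
```

Recognizing cats and dogs this way would require a completely different set of hand-picked measurements.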

2. Neural networks

Neural networks differ from classical machine learning in that they are self-learning. With neural networks, a person does not, for example, write an algorithm specifically for face recognition.

For example, if we used classical methods to build face recognition and, separately, cat-and-dog recognition, we would end up with categorically different algorithms.

In one case, we would measure the distances (and other parameters) between facial features, while for cats and dogs, we would select paws, tails, body size, nose shapes, ears, etc.

The difference with a neural network is that it has a built-in principle by which it learns, and we only need to give it a training sample so that it learns by itself.

The main thing is to choose the right network architecture (for example, one suited to working with 2-D images), specify how many object classes we want at the output, and provide a correct training sample.

As a consequence, a neural network trained to recognize faces and a neural network trained to recognize cats and dogs will be almost the same.

They will have some differences, but they will be minimal.

Deep Learning

Finally, within neural networks, deep learning stands out as a separate area.

This is a rather fashionable term, so it is important to explain its essence and dispel the aura of magic that often surrounds it.

Let's start with some background. When a neural network is small (without hidden layers or with very few of them), it cannot describe complex phenomena or solve any significant, meaningful problems, and in this respect it can be called "stupid".

In deep neural networks, the number of layers can reach tens or even hundreds, which gives them enormous power to generalize complex phenomena and processes.

Recently, deep learning has become increasingly popular and in demand, because more and more data is becoming available and computing power keeps growing.

It is important to note that deep learning is not always necessary.

For example, if we use a neural network model whose complexity exceeds the complexity of the phenomenon under study, the network will overfit, i.e. memorize noise and features that do not reflect the essence of the phenomenon.

In other words, the complexity of the neural network should match the complexity of the phenomenon under study.

Moreover, in many cases, very simple neural networks with a couple of layers are even more accurate than very deep networks with many layers.

The reasons for this may lie in the insufficient amount of data, in the excessive complexity of the network in relation to the phenomenon under study, etc.
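The effect is easy to reproduce outside neural networks. The minimal sketch below swaps in polynomial fitting as a stand-in for an over-complex model (our choice, not the author's): the "phenomenon" is assumed to be y = 2x, the training labels carry hypothetical alternating noise, and a degree-5 polynomial passed through all six training points memorizes that noise, while a straight line, whose complexity matches the phenomenon, does not.

```python
def fit_line(xs, ys):
    # Least-squares straight line: complexity matches the phenomenon
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

def fit_interpolating_polynomial(xs, ys):
    """Lagrange polynomial through every training point: zero training error, noise included."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Training data: true dependence y = 2x plus alternating noise of +/-0.5
train_x = [0, 1, 2, 3, 4, 5]
noise = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
train_y = [2 * x + e for x, e in zip(train_x, noise)]
# New inputs between the training points, labeled with the true, noise-free values
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = [2 * x for x in test_x]

simple_model = fit_line(train_x, train_y)
overfit_model = fit_interpolating_polynomial(train_x, train_y)
for name, model in [("line", simple_model), ("degree-5 polynomial", overfit_model)]:
    print(f"{name}: train MSE {mse(model, train_x, train_y):.3f}, "
          f"test MSE {mse(model, test_x, test_y):.3f}")
```

Run as written, it should report a training MSE of about 0.229 and a test MSE of about 0.015 for the line, versus 0.000 and about 0.681 for the polynomial: perfect on the training data, far worse on new data.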

In general, the trend is that neural networks are increasingly replacing classical machine learning, and deep learning is prevailing over simple neural networks with a small number of layers.

Types of problems that neural networks solve

Now let's look at the classic types of tasks that neural networks are well suited to solve.

Note right away that the types listed below are not strictly delimited: they can blend smoothly into each other.

1. Pattern recognition (classification)

Classification is a typical and one of the most common tasks, for example:

- Face recognition

- Emotion recognition

- Sorting cucumbers by variety on a conveyor, etc.

The task of pattern recognition is a situation where we have a certain number of classes (two or more), and the neural network must assign the image to one of them. This is a classification task.
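A common way for a classification network to produce its answer (a widespread convention, assumed here rather than taken from the text) is to output one score per class, turn the scores into probabilities with the softmax function, and pick the most probable class. The class names and scores below are hypothetical.

```python
import math

def softmax(scores):
    # Subtracting the maximum score avoids overflow and does not change the result
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

classes = ["happy", "sad", "surprised"]  # hypothetical emotion classes
scores = [2.0, 0.5, 1.0]                 # hypothetical raw outputs of the last layer
probs = softmax(scores)
predicted = classes[probs.index(max(probs))]
print(predicted)  # happy
```

The probabilities always sum to 1, so the output can be read as the network's confidence in each class.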

2. Regression

The next type of problem is regression.

The essence of this task is to get at the output of the neural network not a class, but a specific number, for example:

- Determining the age from a photo

- Predicting the stock price

- Estimating the value of real estate

For example, we have data about a property, such as its floor area, number of rooms, type of renovation, development of the surrounding infrastructure, distance from the metro, etc.

Our task is to predict the price of this property based on the available data. Such tasks are regression tasks, because at the output we expect a price, i.e. a specific number.

3. Time series forecasting

Time series forecasting is similar to regression in many ways.

Its essence is that we have a time series of values, and we need to predict which values will come next.

For example, these are tasks for predicting:

- Stock prices, oil, gold, bitcoin

- Processes in boilers (pressure, concentration of certain substances, etc.)

- The amount of traffic on a website

- Volumes of electricity consumption, etc.

Even the Tesla autopilot can partly be attributed to this type of task, because its video stream can be viewed as a time series of images.

Returning to the example of evaluating the processes occurring in boilers, the task, in fact, is twofold.

On the one hand, we predict the time series of pressure and concentration of certain substances, and on the other hand, we recognize patterns in this time series in order to assess whether the situation is critical. And this is where pattern recognition comes in.

That is why we immediately noted the fact that there is not always a clear boundary between different types of tasks.

4. Clustering

Clustering is learning without a teacher (unsupervised learning).

In this situation, we have a lot of data and do not know which items belong to which class, but we assume that a certain number of classes exist.

For example, these are:

- Identification of classes of readers in email newsletters

- Identification of classes of images

A typical marketing task is to effectively conduct an email newsletter. Let's say we have a million email addresses, and we're doing some sort of mailing list.

Of course, people behave completely differently: someone opens almost all letters, someone opens almost nothing.

Someone constantly clicks on links in letters, and someone just reads them and clicks extremely rarely.

Someone opens letters on weekend evenings, and someone opens them on weekday mornings, and so on.

The task of clustering in this case is to analyze the entire dataset and identify several classes of subscribers, with similar behavioral patterns within each class.
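The text describes clustering with a neural network; as a simpler stand-in, the sketch below uses k-means, a classical clustering algorithm, to split hypothetical subscribers by the share of emails they open and the share in which they click a link. Starting from the first k points is a simplification for reproducibility; real implementations usually initialize randomly or with k-means++.

```python
import math

def kmeans(points, k, iterations=20):
    # Simplified deterministic start: the first k points become the initial centroids
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
    return centroids, labels

# Hypothetical subscribers: (share of emails opened, share of emails with a link click)
subscribers = [(0.9, 0.6), (0.1, 0.0), (0.85, 0.5), (0.05, 0.02), (0.95, 0.7), (0.15, 0.05)]
centroids, labels = kmeans(subscribers, k=2)
print(labels)     # [0, 1, 0, 1, 0, 1]
print(centroids)  # centre of the "active readers" and the "passive readers" groups
```

No labels were given: the algorithm discovered the "active" and "passive" groups on its own, which is what distinguishes clustering from classification.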

Another task of this type is, for example, identifying classes for images. One of the projects in our Lab is working with fantasy pictures.

If we pass these images to the neural network, then, after analyzing them, it, for example, will “tell” us that it has discovered 17 different classes.

Let's say one class is images with castles. Another is pictures with a person in the center. The third class is pictures with a dragon, the fourth is pictures with many characters against a natural landscape, some kind of battle taking place, etc.

These are examples of typical clustering problems.

5. Generation

Generative networks (such as GANs) are one of the newest types of networks; they have emerged recently and are developing rapidly.

In short, their task is machine creativity. Machine creativity means the generation of any content:

- Texts (poems, lyrics, stories)

- Images (including photorealistic ones)

- Audio (voice generation, musical works), etc.

In addition, content transformation tasks can be added to this list:

- colorizing black-and-white films

- changing the season in a video (for example, transforming the environment from winter to summer), etc.

When the season is "changed", all important aspects are transformed. For example, there is a dashcam video in which a car drives through the city streets in winter: there is snow around, the trees are bare, people are wearing hats, etc.

Alongside it is another version of the same video, transformed into summer: instead of snow there are green lawns, the trees have foliage, people are lightly dressed, etc.

Of course, there are some other types of tasks that neural networks solve, but we have considered all the main ones.


Differences between neural networks and classical machine learning

Now that we have talked about the main tasks that neural networks can solve, it is worth dwelling a little more on the features that distinguish neural networks from classical machine learning.

We highlight 5 such differences:

1. Self-learning

Indirectly, we have already touched on this point above, so now we will reveal it a little more.

The essence of self-learning is that we specify a general algorithm for how the network will learn, and the network then "figures out" how a certain phenomenon or process is arranged from the inside.

For this to work, the neural network must be given correct, prepared data and an algorithm by which it will be trained.

As an illustration of this feature, consider a genetic algorithm, using the example of a program with emoticons flying across the monitor screen.

50 emoticons were moving at the same time, and they could slow down, speed up, and change direction.

We built into the algorithm that if they flew off the edge of the screen or were hit by a mouse click, they "died".

After a "death", a new emoticon was immediately created, based on the one that had "lived" the longest.

This was done with a probabilistic function that gave priority in "reproduction" to the emoticons that lived the longest and minimized the likelihood of "offspring" for those that quickly flew off the screen or were hit by a mouse click.

Immediately after the program starts, all emoticons fly randomly and quickly "die" by flying off the edges of the screen.

Literally one minute passes, and they learn not to "die" by flying off the right edge of the screen; instead they fly off the bottom. After another minute, they stop flying off the bottom as well.

The emoticons split into several groups: some flew along the perimeter of the screen, some hovered in the center, some traced figure eights, etc. At this point, we had not yet clicked on them with the mouse.

Then we started clicking on them and, of course, they immediately died, because they had not yet learned to avoid the cursor.

After 5 minutes, it was impossible to catch any of them: as soon as the cursor approached, the emoticons did a somersault and dodged the click.

The most important and delightful thing is that we did not program them with any algorithm for avoiding the edges of the screen or dodging the mouse. The first behavior is easy to implement by hand, but the second is an order of magnitude more difficult.

Writing something like this by hand would take about a week, but the beauty is that we achieved the same or even better result with a couple of hours of programming and a few more minutes of learning.

In addition, by changing a couple of lines of code, we could add any other goal to their learning algorithm. For example, if they fly over the desktop, they should avoid the icons on it.

Or we want them not to collide with each other. Or we want to split them into 2 groups painted in different colors, so that they do not collide with emoticons of the other color. And so on.

And they would learn it in the same 5-10 minutes according to the same principles of genetic algorithms.
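The selection mechanism described above (the shortest-lived individual dies and is replaced by offspring of a parent chosen with probability proportional to its lifetime) can be sketched in a few lines. This is a toy version under stated assumptions: each genome is just a list of numbers (in the emoticon program it would presumably encode each emoticon's behavior, for example the weights of its small network), `lifetime` is a hypothetical fitness function standing in for the real simulation, and "death" simply removes the least fit individual at each step instead of being triggered by screen edges or clicks.

```python
import random

def lifetime(genome):
    """Hypothetical stand-in for "how long an emoticon survived".
    In this toy version, survival is longest when every gene is close to 0.5."""
    return 1.0 / (1.0 + sum((g - 0.5) ** 2 for g in genome))

def evolve(population, steps, mutation=0.05, rng=random):
    population = [list(genome) for genome in population]
    for _ in range(steps):
        scores = [lifetime(genome) for genome in population]
        # The shortest-lived individual "dies"...
        dead = scores.index(min(scores))
        # ...and is replaced by offspring of a parent picked with probability proportional to lifetime
        parent = rng.choices(population, weights=scores, k=1)[0]
        population[dead] = [gene + rng.gauss(0.0, mutation) for gene in parent]
    return population

rng = random.Random(0)  # fixed seed so the run is reproducible
start = [[rng.uniform(-1.0, 2.0) for _ in range(4)] for _ in range(50)]
final = evolve(start, steps=2000, rng=rng)
print(f"best lifetime: {max(map(lifetime, start)):.3f} -> {max(map(lifetime, final)):.3f}")
```

Because the best individual is never the one replaced (unless all are equal), the best lifetime can only stay the same or improve, while mutation keeps exploring new behaviors.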

I hope this example has shown you the amazing power of everything related to artificial intelligence and neural networks.

For all this to work, it is important to have the competence to write such genetic algorithms and to be able to supply the network with the correct input data.

Thus, in the example discussed above, at first it was not possible to make any significant progress in training the emoticons, because they were given the distance to the edges of the screen and the distance to the mouse cursor as input.

As a result, the neural network did not have enough power to learn from this type of data, and there was practically no progress.

After that, other values were passed as input parameters: one divided by the distance to the edge of the screen, and one divided by the distance to the cursor.

The result was the beautiful learning we just described. Why? Because when an emoticon flew up to the edge of the screen, this parameter started to go off the scale, changing very quickly, and with this representation the neural network was able to identify an important pattern and learn.
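A quick calculation shows why the 1/distance representation helped. In the sketch below (the function name and the `min_distance` guard are our assumptions), moving one pixel closer changes the raw distance by exactly 1 everywhere, but changes 1/distance by a tiny amount far from the edge and by a large amount right next to it, so danger shows up as a sharp jump in the input.

```python
def inverse_distance(distance_px, min_distance=1e-6):
    # min_distance is an assumption: it avoids division by zero when an emoticon touches the edge
    return 1.0 / max(distance_px, min_distance)

for d in [400, 100, 20, 5, 2]:
    jump = inverse_distance(d - 1) - inverse_distance(d)
    print(f"{d:>3} px from the edge: raw input changes by 1, 1/d input changes by {jump:.6f}")
```

Far from the edge the 1/d input is almost flat; near the edge it grows explosively, which is exactly the signal the network needed.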

That's all with self-learning, moving on.

2. Requires a training sample

Neural networks require a training sample. This is their main disadvantage, because usually a lot of data needs to be collected.

For example, if we write face recognition using classical machine learning, we don't need a database of photos: a few photos are enough for a person to create a face detection algorithm, or they can even do it from memory, without any photos at all.

With neural networks, the situation is different: we need a large database of whatever we want to train the neural network on. It is important not only to assemble this database, but also to preprocess it in a certain way.

Therefore, datasets are very often collected with the help of freelancers or by large businesses (a classic example is Google collecting data through the captchas it shows us).

In our course, we focus on this, including in the Laboratories and during the preparation of theses. It is important to learn how to collect this data by hand, gaining valuable experience and understanding how to do it correctly and what to pay attention to.

If you master it yourself, then in the future you will be able to write the correct technical specifications for contractors, getting the high-quality results you need.

3. Human understanding of the phenomenon is not required

The third point is a huge plus of neural networks.

You may not be an expert in any subject area, but being an expert in neural networks you can solve almost any problem.

Domain expertise certainly helps, because we can preprocess the data better and possibly solve the problem faster, but in general it is not a critical factor.

Whereas in classical machine learning we cannot solve the problem at all without being an expert in the subject area (for example, nuclear energy, mechanical engineering, geological processes, etc.), with neural networks it is quite feasible.

4. Much more accurate in most tasks

The next significant advantage of neural networks is their high accuracy.

For example, according to Tesla's own statistics, the accident rate per million kilometers driven on Autopilot is about 10 times lower than that of an ordinary driver.

Some studies also report that neural networks diagnose certain conditions from MRI scans more accurately than medical specialists.

Neural networks beat people in almost any game: chess, poker, go, computer games, etc.

In a word, their accuracy is much higher in almost all tasks, which has led to their more and more active use in various areas of human life, from marketing and finance to medicine, industry and art.

5. It is not clear how they work inside

However, with all these possibilities, neural networks have the following minus - it is not clear how they work "inside".

We cannot look inside and understand how everything works there, how it "thinks". It's all hidden in the weights, in the numbers.

More or less "seeing" what is happening inside is possible, perhaps, in convolutional networks when working with images, but even this does not allow us to get a complete picture.

A typical example of a neural network being something of a black box is a lawsuit in which companies contact Yandex and ask why this particular site, and not some other, was displayed at this position in the search results.

And Yandex cannot answer such questions, because the decision is made by a neural network rather than by a well-defined algorithm that can be fully explained.

To answer such a question is a task as non-trivial as, for example, to predict the exact trajectory along which this particular metal ball, placed exactly in this particular place, will roll off this table.

Yes, we know the laws of gravity, but it is almost impossible to predict exactly how its movement will occur.

It's the same with a neural network. The principles of its work are clear, but it is not possible to understand how it will work in each specific case.

The structure of the biological neuron and the model of the artificial neuron

The structure of a biological neuron

In order to understand how an artificial neuron works, let's start by remembering how an ordinary nerve cell functions.

It was its structure and functioning that Warren McCulloch (American neuropsychologist, neurophysiologist, theorist of artificial neural networks and one of the founders of cybernetics) and Walter Pitts (American neurolinguist, logician, and mathematician) took as the basis for the artificial neuron model back in the middle of the last century.

So what does a neuron look like?

A neuron has a body that accumulates and in some way transforms the signal that comes to it through dendrites - short processes whose function is to receive signals from other nerve cells.

Having accumulated a signal, the neuron transmits it further along the chain, to other neurons, but already along a long process - axon, which, in turn, is connected to the dendrites of other neurons, and so on.

Of course, this is an extremely primitive model, because every neuron in our brain can be connected to thousands of other neurons.

The next thing we need to pay attention to is how the signal is transmitted from the axon to the dendrites of the next neuron.

Dendrites are connected to axons not directly but through so-called synapses, and when the signal reaches the end of the axon, a neurotransmitter is released in the synapse, which determines exactly how the signal will be transmitted further.

Neurotransmitters are biologically active chemicals through which an electrochemical impulse is transmitted from a nerve cell through the synaptic space between neurons, and also, for example, from neurons to muscle tissue or glandular cells.

In other words, the neurotransmitter is a kind of bridge between the axon and the many dendrites of the receiving cell.

If a lot of neurotransmitter is released, the signal will be transmitted fully or even amplified. If little is released, the signal will be weakened, or extinguished completely and not passed on to other neurons.

Thus, the formation of stable neural pathways (unique chains of connections between neurons), which are the biological basis of the learning process, depends on how much neurotransmitter is released.

All our training since childhood is based on this, and the formation of completely new connections is quite rare.

Basically, all our learning and mastering new skills is related to adjusting how much neurotransmitter is released at the points of contact between axons and dendrites.

For example, our nervous system can establish a connection between the concepts of "apple" and "pear". That is, as soon as we see an apple, hear the word "apple", or just think about it, an image of a pear appears in our mind through association, because a connection between these concepts has been established: a neural pathway has formed.

At the same time, there is most likely no such connection between the concepts of "apple" and "pillow", so the image of an apple does not make us think of a pillow: the corresponding neural connection is very weak or absent.

An interesting point, which is also worth mentioning, is that in insects, the axon and dendrite are connected directly, without the release of a neurotransmitter, so they do not learn in the way that animals and humans do.

They don't learn during their lifetime. They are born already knowing everything that they will be able to do during their life.

For example, an individual fly cannot learn to fly around a glass window: it will keep bumping into it, as we constantly observe.

All neural connections already exist, and the transmission strength of each signal is already adjusted in a certain way for all existing connections.

On the one hand, such inflexibility in terms of training has its drawbacks.

On the other hand, insects usually react very quickly precisely because of this different organization of nerve connections: there are no neurotransmitters, which, acting as intermediaries, naturally slow down signal transmission.

With all this, learning in insects can still occur, but only evolutionarily, from generation to generation.

Suppose the ability to fly around glass became an important factor for the survival of the species; then, after a certain number of generations, all the flies in the world would learn to fly around it.

This has not happened yet, because glass windows are relatively recent, and evolutionary learning can take tens of thousands of years.

If this does not play an important role for the preservation of the species, then this behavior model will not be acquired by them.

The McCulloch-Pitts model of the mathematical neuron

Now let's look at the McCulloch-Pitts model of an artificial, mathematical neuron, developed by analogy with nerve cells.

Usually, when working with mathematical neurons, the following notation is used:

X - input data

W - weights

H - body of the neuron

Y - output of the neural network

Input data is the signals coming to the neuron.

Weights are equivalents of synaptic connection and neurotransmitter release, presented as numbers, including negative ones.

The weight is represented by a real number, by which the value of the signal entering the neuron will be multiplied.

In other words, the weight shows how strongly certain neurons are connected to each other - this is the coefficient of connection between them.

The body of the neuron accumulates a weighted sum: each incoming signal is multiplied by its weight, and the products are added up.

In short, the process of learning a neural network is the process of changing the weights, i.e. coefficients of connection between the neurons present in it.

During learning, the weights change. If a weight is positive, the signal arriving at the receiving neuron is amplified.

If the weight is zero, then there is no influence of one neuron on another. If the weight is negative, then the signal is extinguished in the receiving neuron.

Finally, the output (Y) is what we get after the neuron processes the accumulated signal. It is a certain function of the weighted sum accumulated in the body of the neuron.
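A minimal sketch of this model in plain Python, using a threshold (step) output in the spirit of the original McCulloch-Pitts neuron; the function name and the AND-gate weights and threshold are illustrative choices, not from the text.

```python
def mcculloch_pitts_neuron(inputs, weights, threshold):
    """X -> weighted sum H in the body of the neuron -> output Y (1 above the threshold, else 0)."""
    h = sum(x * w for x, w in zip(inputs, weights))  # body of the neuron
    return 1 if h > threshold else 0

# With weights (1, 1) and threshold 1.5, the neuron fires only when both inputs fire (logical AND)
for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, mcculloch_pitts_neuron(x, weights=(1, 1), threshold=1.5))
```

Changing only the weights or the threshold changes what the neuron computes: with a threshold of 0.5, the same neuron acts as logical OR.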

Activation functions

This function is called the activation function. Its task is to determine the value of the output data, the output signal.

These functions vary. For example, with the Heaviside (step) function, if the sum accumulated in the body of the neuron exceeds a certain threshold, the output is 1; if it is below the threshold, the output is 0.

Different values are possible at the output of the neuron, but most often they try to bring them to the range from -1 to 1 or, which happens even more often, from 0 to 1.

Another example is the ReLU function (not shown in the illustration), which works differently: if the weighted sum in the body of the neuron is negative, it is converted to 0, and if it is positive, it is passed through unchanged (the output equals the input x).

So, if the value on the neuron was -100, then after processing by the ReLU function it will become 0. If this value is equal, for example, 15.7, then the output will be the same 15.7.

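Sigmoid functions (the logistic function and the hyperbolic tangent) and some others are also used to process the signal from the neuron body. They are usually used to "smooth" values, squashing them into a bounded range: (0, 1) for the logistic function and (-1, 1) for the hyperbolic tangent.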

Fortunately, it is not necessary to understand the various formulas and graphs of these functions in order to write code, since they are already embedded in the libraries used to work with neural networks.
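Even though libraries provide these functions, a few lines of plain Python make the definitions concrete; the ReLU values reproduce the -100 and 15.7 example above. The function names are ours.

```python
import math

def heaviside(h, threshold=0.0):
    # 1 if the accumulated sum exceeds the threshold, otherwise 0
    return 1.0 if h > threshold else 0.0

def relu(h):
    # Negative sums become 0; positive sums pass through unchanged
    return max(0.0, h)

def logistic(h):
    # Smoothly squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-h))

def tanh(h):
    # Smoothly squashes any real number into the range (-1, 1)
    return math.tanh(h)

for h in [-100.0, -1.0, 0.0, 15.7]:
    print(h, heaviside(h), relu(h), round(logistic(h), 4), round(tanh(h), 4))
```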

The simplest example of classifying 2 objects

Now that we have understood the general principle of operation, let's look at the simplest example illustrating the process of classifying 2 objects using a neural network.

Imagine that we need to distinguish the input vector (1,0) from the vector (0,1). Say, black from white, or white from black.

At the same time, we have at our disposal the simplest network of 2 neurons.

Let's say we give the top link weight of "+1" and the bottom link weight of "-1". Now, if we feed the vector (1,0) to the input, then we will get 1 at the output.

If we feed the vector (0,1) to the input, then we will get -1 at the output.

To simplify the example, we will assume that we use the identity activation function, for which f(x) = x, i.e. the function does not transform its argument in any way.

Thus, our neural network can classify 2 different objects. This is a very, very primitive example.
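The same two-input example in a few lines of Python, with the weights +1 and -1 set by hand and the identity activation, as described (the function names are ours):

```python
def identity(h):
    # f(x) = x: the activation leaves the weighted sum unchanged
    return h

def classify(vector, weights=(1.0, -1.0), activation=identity):
    """Two inputs, hand-picked weights +1 (top link) and -1 (bottom link), one output."""
    h = sum(x * w for x, w in zip(vector, weights))
    return activation(h)

print(classify((1, 0)))  # 1.0
print(classify((0, 1)))  # -1.0
```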

Note that in this example we assign the weights "+1" and "-1" ourselves, which is not how it is done in practice: in reality, they are selected automatically during network training.

Training a neural network is a selection of weights.

Any neural network consists of 2 components:

• Architecture

• Weights

Architecture is its structure and how it is arranged from the inside (we will talk about this a little later).

And when we feed data to the input of the neural network, we need to train it, i.e. choose the weights between neurons so that it really does what we expect from it, for example, it can distinguish photos of cats from photos of dogs.

In a broader sense, training a neural network can also include selecting its architecture during research.

For example, when we work with genetic algorithms, the neural network can change not only its weights but also its structure during learning.

But this is the exception rather than the rule, so in general, by training a neural network we mean selecting its weights.

Types of training

Now that we have talked about the general learning mechanism of a neural network, let's look at three types of learning.

1. With a teacher (supervised learning)

Learning with a teacher is one of the most common tasks. In this case, we have a sample and we know the correct answers for it.

For example, we know for sure in advance that these 10 thousand photos show dogs, and those 10 thousand photos show cats.

Or we know the exact prices of some shares over a long period.

Let's say we know the prices for 5 days in a row and the price on the following day. Then we move forward one day, and again we know the prices for 5 days and the price on the next day, and so on.
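The stock-price example above amounts to cutting a price history into training pairs of (5 past prices -> next price). Here is a minimal sketch. The price list is made up for illustration, and `make_windows` is a hypothetical helper, not a library function.

```python
def make_windows(prices, window=5):
    """Turn a price history into (inputs, correct answer) pairs."""
    X, y = [], []
    for i in range(len(prices) - window):
        X.append(prices[i:i + window])  # the previous `window` days
        y.append(prices[i + window])    # the price on the following day
    return X, y

prices = [10, 11, 12, 11, 13, 14, 15]  # hypothetical daily prices
X, y = make_windows(prices)
print(X)  # [[10, 11, 12, 11, 13], [11, 12, 11, 13, 14]]
print(y)  # [14, 15]
```

Each pair is one supervised example: the network sees the inputs and is told the correct answer.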

Or we know the characteristics of houses and their real prices, if we are talking about real estate price forecasting.

These are all examples of supervised learning.

2. Without a teacher (unsupervised learning)

The second type of learning is learning without a teacher, for example, clustering.

In this case, we feed some data to the network input and assume that several classes can be distinguished in it.

For example, we have a large database of email addresses of people we interact with.

They open our emails, read them, click or don't click the links, do so at certain times of day, and so on.

Having this information (for example, from the mailing service's statistics), we can pass this data to the neural network with a "request" to identify several classes of readers that are similar to each other according to some criterion.

Using this data, we would be able to build a more effective marketing strategy for interacting with each of these groups of people.

Unsupervised learning is used in cases where it is required to detect internal relationships, dependencies and patterns that exist between objects, but we do not know in advance what exactly these patterns are.
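To show what "finding groups without known answers" means in practice, here is a minimal sketch of k-means clustering. Note that this is a classical clustering algorithm swapped in for simplicity, not a neural network. The reader features (open rate, click rate) and their values are hypothetical.

```python
import numpy as np

def kmeans(points, k, iterations=20, seed=0):
    rng = np.random.default_rng(seed)
    # Start from k randomly chosen points as the initial cluster centers.
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(iterations):
        # Distance from every point to every center: shape (n_points, k).
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of the points assigned to it.
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

# Hypothetical readers: [share of emails opened, share of links clicked].
readers = np.array([
    [0.90, 0.40], [0.85, 0.35], [0.95, 0.50],  # engaged readers
    [0.10, 0.00], [0.05, 0.01], [0.15, 0.02],  # mostly ignore the emails
])
labels, centers = kmeans(readers, k=2)
print(labels)
```

No correct answers were given: the algorithm discovered the two groups from the data alone.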

3. Reinforcement learning

The third type of learning is reinforcement learning. It is a bit like learning with a teacher, where there is a ready base of correct answers. The difference is that here the neural network does not have the entire base at once: additional data arrives during training and gives the network feedback on whether the goal has been achieved.

A simple example: a bot walks through a maze and at the 59th step receives information that it “fell into a hole”, or after the 100 steps allotted for exiting the maze, it learns that it “did not reach the exit”.

Thus, the neural network understands that the sequence of its actions did not lead to the desired result and learns by correcting its actions.

That is, the network does not know in advance what is right and what is not, but receives feedback when an event occurs. Almost all games are built on this principle, as well as various agents and bots.
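To make this feedback loop concrete, here is a tiny sketch of tabular Q-learning. This is a classical reinforcement learning method, used here instead of a neural network for brevity. The environment is a hypothetical five-cell corridor standing in for the maze. The agent is rewarded only when it reaches the exit, and from that feedback alone it learns which direction to move.

```python
import random

N_STATES = 5        # cells 0..4 of a corridor; cell 4 is the exit
ACTIONS = (-1, +1)  # action 0 = move left, action 1 = move right
rng = random.Random(0)

def step(state, action):
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0  # feedback arrives only at the exit
    return next_state, reward, done

def greedy(q_row):
    best = max(q_row)
    # Break ties randomly so the agent does not get stuck early on.
    return rng.choice([a for a, v in enumerate(q_row) if v == best])

q = [[0.0, 0.0] for _ in range(N_STATES)]  # value estimate per (cell, action)
alpha, gamma, epsilon = 0.5, 0.9, 0.2      # learning rate, discount, exploration

for episode in range(500):
    state = 0
    for _ in range(100):  # at most 100 steps per attempt
        explore = rng.random() < epsilon
        action = rng.randrange(2) if explore else greedy(q[state])
        next_state, reward, done = step(state, action)
        target = reward if done else reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
        if done:
            break

policy = [greedy(q[s]) for s in range(N_STATES - 1)]
print(policy)  # the learned action in each non-exit cell
```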

By the way, the dying emoji example we looked at above is also reinforcement learning.

Other examples include autopilots and selecting a style to suit a particular person's wishes based on their previous choices.

For example, a neural network generates 20 pictures and a person chooses 3 of them that he likes the most. Then the neural network creates 20 more pictures and the person again chooses what he likes the most, and so on.

Thus, the network reveals a person's preferences in terms of image style, color scheme and other characteristics, and can create images for the tastes of each individual person.

Neural network architectures

One of the key tasks in creating a neural network is choosing its architecture. The developer makes this choice based on the task at hand, and in general the neural network is then trained only by changing its weights.

An exception is genetic algorithms, where a population of neural networks rebuilds itself during training, including its architecture.

However, in the vast majority of cases, the architecture of the neural network does not spontaneously change during the learning process.

All changes to it are made by the developer himself through adding or removing layers, increasing or decreasing the number of neurons on each layer, etc.

Let's look at the main architectures, starting with a classic example: a fully connected feed-forward neural network.

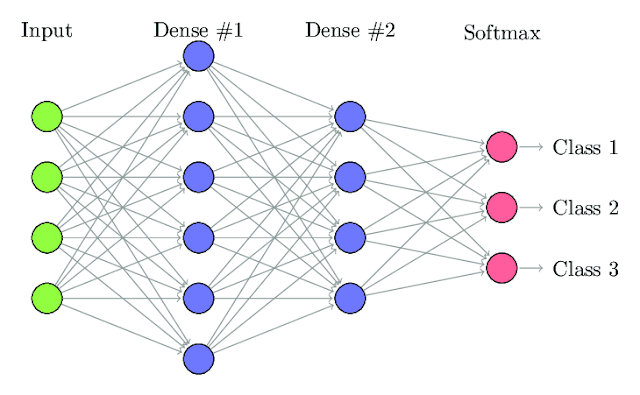

1. Fully connected neural network

A fully connected feed-forward neural network is a network in which each neuron is connected to all neurons in the adjacent layers, and all connections are directed strictly from the input neurons toward the output neurons.

On the left in the figure, we see the input layer to which the signal arrives. To the right are two hidden layers, and the rightmost layer of the two neurons is the output layer.

This network can solve the classification problem if we need to distinguish any two classes - let's say cats and dogs.

For example, the upper of these two neurons is responsible for the "dog" class and can take the value 0 or 1. The lower one is responsible for the "cat" class and can take the same values, 0 or 1.

Alternatively, such a network could predict 2 parameters, for example, the pressure and temperature in a boiler, from 3 input values.
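In code, "every neuron connected to every neuron of the next layer" simply means one weight matrix per pair of adjacent layers. Here is a minimal NumPy sketch of a forward pass. The hidden layer sizes (4 and 4) are an assumption, since the figure's exact sizes are not given in the text. The weights are random and untrained.

```python
import numpy as np

rng = np.random.default_rng(42)

def relu(x):
    return np.maximum(0.0, x)

# Hypothetical layer sizes: 3 inputs -> 4 -> 4 -> 2 outputs.
sizes = [3, 4, 4, 2]
weights = [rng.normal(size=(n_in, n_out)) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]

def forward(x):
    for i, (W, b) in enumerate(zip(weights, biases)):
        x = x @ W + b              # every input feeds every neuron of the next layer
        if i < len(weights) - 1:   # hidden layers use ReLU
            x = relu(x)
    return x                       # output layer left linear, e.g. two predicted values

out = forward(np.array([12.0, 15.0, 17.0]))
print(out.shape)  # (2,)
```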

To better understand how this works, let's look at how such a fully connected network can be used for, say, weather forecasting.

In this example, we feed in temperature data for the last three days, and as an output we want to get predictions for the next two days.

Between the input and output layers, we have 2 hidden layers where the neural network will generalize the data.

For example, in the first hidden layer, one neuron will be responsible for such a simple concept as "the temperature is rising."

Another neuron will be responsible for the concept of "temperature drops".

The third - for the concept of "temperature is unchanged."

Fourth - "the temperature is very high."

Fifth - "temperature is high."

Sixth - "normal temperature."

Seventh - "low temperature."

Eighth - "very low."

Ninth - "the temperature jumped down and returned."

Etc.

Of course, we describe all of this in words that we understand. During training, the network itself arranges for each neuron to be responsible for something that helps it generalize the data effectively.

And each of the neurons of the first hidden layer (including the one circled in the picture) will be responsible for highlighting a certain feature in the incoming data.

For example, if it is responsible for the concept of “temperature is rising”, then the input neuron carrying the latest temperature, +17, will feed into it with a positive weight.

The input neuron with a temperature of +15 will have a zero weight, and the one with the oldest temperature, +12, will have a negative weight.

If the temperature in the submitted data really did rise, the neuron circled in the figure will be activated. If the temperature drops, it will remain inactive.
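The "temperature is rising" neuron can be sketched directly. The weights -1, 0 and +1 are a hypothetical choice that matches the signs described in the text. The ReLU activation is also an assumption. A trained network would find its own values.

```python
import numpy as np

# Temperatures for the last three days, oldest first.
rising = np.array([12.0, 15.0, 17.0])
falling = np.array([17.0, 15.0, 12.0])

# Hypothetical weights: negative for the oldest day, zero for the middle, positive for the latest.
w = np.array([-1.0, 0.0, +1.0])

def relu(x):
    return max(0.0, x)

print(relu(w @ rising))   # 5.0 -> the neuron is active
print(relu(w @ falling))  # 0.0 -> the neuron stays inactive
```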

The neurons of the second hidden layer (for example, the one circled in the picture below) will be responsible for higher-level features, for example, that it is autumn, or some other time of year.

The activation of this neuron will be positively affected by the fall in temperature, characteristic of autumn.

If a gradual increase in temperature is observed, it will most likely have a negative statistical effect, i.e., it will tend not to activate this neuron.

Of course, the temperature sometimes rises in autumn, but statistically, a temperature increase over 3 days will most likely have a negative effect on this neuron.

A very high temperature will definitely have a negative effect on the neuron responsible for autumn, because there are no very high temperatures at this time of year, and so on.

Finally, hidden layer 2 neurons are connected to two output neurons that produce temperature predictions.

At the same time, note that we ourselves do not set what each of the neurons will be responsible for (this would be an example of classical machine learning). The neural network itself will make the neurons "responsible" for certain concepts.

Moreover, inside the neural network, the concepts familiar to us, such as "spring", "autumn", "temperature drops", etc., do not exist. We simply labeled them this way for convenience, so that we can roughly understand what happens inside during training.

In reality, the neural network itself, in the learning process, will highlight exactly those properties that are needed to solve this particular problem.

It is important to understand that a neural network with just such an architecture will not correctly predict the temperature - this is a simplified example showing how various features can be extracted inside the network (the so-called feature extraction process).

The process of feature extraction is well illustrated by the work of convolutional neural networks.

Suppose we feed photos of cats and dogs to the network input, and the network begins to analyze them.

On the first layers, the network determines the most common features: a line, a circle, a dark spot, an angle close to a straight line, a bright spot against a dark background, and so on.

On the next layer, the network will be able to extract other features: ear, paw, head, nose, tail, etc.

On the layer after that, the analysis will be something like this:

- here the tail is bent up, this correlates with the husky, which means a dog;

- and here are short legs, and cats do not have short legs, therefore, most likely, a dog, a dachshund;

- and here is a triangular ear with a white tip, similar to such and such a breed of cat, etc.

Approximately in this way the feature extraction process takes place.

Of course, the more neurons in the network, the more detailed, specific properties it can highlight.

If there are few neurons, it will be able to work only in “large strokes”, because it does not have enough power to analyze all possible combinations of features.

Thus, the general principle of feature extraction is increasing generalization when moving from one layer to the next, from low-level features to ever higher-level ones.

So, let's remember again what a neural network is.

First, its architecture.

It is set by the developer, i.e. we ourselves determine how many layers the network has, how they are connected, how many neurons are on each layer, whether this network has memory (more on that later), what activation functions it has, etc.

The network architecture can be represented, for example, as a regular JSON, YAML or XML file (text files that can fully describe its structure).

Second, its trained weights.

The neural network weights are stored as a collection of matrices of real numbers. The total count of these numbers equals the total number of connections between neurons in the network.

The number of matrices themselves depends on the number of layers and how the neurons of different layers are connected to each other.

The weights are initialized at the beginning of training (usually randomly) and adjusted during training.

Thus, the network can be easily saved by saving its architecture and weights, and later reuse it by referring to files with architecture and weights.
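The "architecture file + weights file" idea can be sketched without any deep learning library. The JSON layout below is a hypothetical format invented for illustration, not Keras's. In Keras itself, `model.to_json()` and `model.save_weights(...)` play these two roles.

```python
import json
import os
import tempfile

import numpy as np

# A hypothetical, minimal architecture description (not a Keras format).
architecture = {"layers": [{"inputs": 3, "units": 4, "activation": "relu"},
                           {"inputs": 4, "units": 2, "activation": "linear"}]}

rng = np.random.default_rng(0)
# One weight matrix per layer, shape (inputs, units), initialized randomly.
weights = {f"W{i}": rng.normal(size=(layer["inputs"], layer["units"]))
           for i, layer in enumerate(architecture["layers"])}

folder = tempfile.mkdtemp()
arch_path = os.path.join(folder, "architecture.json")
weights_path = os.path.join(folder, "weights.npz")

# Save: the architecture as text, the weights as binary arrays.
with open(arch_path, "w") as f:
    json.dump(architecture, f)
np.savez(weights_path, **weights)

# Later: restore both parts.
with open(arch_path) as f:
    restored_arch = json.load(f)
with np.load(weights_path) as data:
    restored_weights = {name: data[name] for name in data.files}

n_connections = sum(w.size for w in restored_weights.values())
print(n_connections)  # 3*4 + 4*2 = 20
```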

Training a fully connected network using the method of error backpropagation (gradient descent)

How is a fully connected feed-forward neural network trained? Let's look at this question using the example of the backpropagation method, which computes the gradients used by the classical gradient descent method.

This is a method of updating the weights of a neural network, in which the propagation of error signals occurs from the outputs of the network to its inputs, in the opposite direction to the forward propagation of signals in normal operation.

Note right away that plain gradient descent is not the most advanced optimization algorithm. When working with the Keras library, we will use more efficient variants of it.

It is also important to understand that in other types of networks, learning occurs according to other algorithms, but for now it is important for us to understand the general principle.

Let's go back to the temperature prediction example and say that the output signal is 2 real numbers, i.e., temperature values in degrees for 2 days.

Thus, we know the correct answers, i.e. what the actual temperature was, and we know the answers that the neural network gave us.

To measure the error during training, we will use the so-called mean squared error function.

For example, for the first day the network predicted 10 degrees, but the actual temperature was 12. For the second day, it predicted 11 degrees, and the actual temperature was also 11.

The mean squared error in this case is the average of the squared differences between the predicted and actual temperatures: ((10 - 12)² + (11 - 11)²) / 2 = (4 + 0) / 2 = 2.
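The same calculation in Python, as a minimal sketch with our own function name:

```python
def mean_squared_error(predicted, actual):
    # Average of the squared differences between predictions and true values.
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

print(mean_squared_error([10, 11], [12, 11]))  # 2.0
```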

During training, the algorithms (including backpropagation) change the weights so as to reduce this mean squared error.

If the error is zero, the network's outputs exactly match the correct answers for the whole training set, so, for example, it recognizes all images in the training set correctly.

In practice, the error almost never reaches zero, but it can get close to zero, and in that case the accuracy will be very high.
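To see backpropagation and the mean squared error working together, here is a minimal NumPy sketch. It uses a tiny network (3 inputs, 8 tanh hidden neurons, 2 linear outputs) trained by plain gradient descent. The data is synthetic and made up for illustration: each "forecast" simply continues the recent trend. The layer sizes and learning rate are also assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 3 past (scaled) temperatures -> 2 future ones.
X = rng.normal(size=(64, 3))
trend = X[:, 2] - X[:, 1]
Y = np.stack([X[:, 2] + trend, X[:, 2] + 2 * trend], axis=1)

W1 = rng.normal(scale=0.5, size=(3, 8))
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 2))
b2 = np.zeros(2)
learning_rate = 0.1
losses = []

for step in range(1000):
    # Forward pass.
    h = np.tanh(X @ W1 + b1)
    y_pred = h @ W2 + b2
    err = y_pred - Y
    losses.append(np.mean(err ** 2))  # mean squared error

    # Backward pass: propagate the error from the outputs back toward the inputs.
    d_pred = 2.0 * err / err.size           # dLoss / dy_pred
    dW2 = h.T @ d_pred
    db2 = d_pred.sum(axis=0)
    d_h = (d_pred @ W2.T) * (1.0 - h ** 2)  # through tanh: tanh' = 1 - tanh^2
    dW1 = X.T @ d_h
    db1 = d_h.sum(axis=0)

    # Gradient descent: step every weight against its gradient.
    W1 -= learning_rate * dW1
    b1 -= learning_rate * db1
    W2 -= learning_rate * dW2
    b2 -= learning_rate * db2

print(losses[0], losses[-1])  # the error shrinks during training
```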

Gradient Descent Method

Let's imagine now that we have some error surface. Let's say we have a neural network with 2 weights, and we can change these weights.

Different values of these weights will correspond to different neural network errors.

By plotting these values, we get a surface that shows how the error depends on the weight values W₁ and W₂ (the error values X₀-X₄ in the illustration).

Now imagine that our neural network has not two but a thousand weights, and different combinations of these thousand weights produce different errors on the same training set. In this case, we get a thousand-dimensional surface.

We can change a thousand real numbers and each time get a new error value.

This thousand-dimensional surface can be compared to a crumpled blanket with its local maxima and minima (the largest and smallest values of the function within some neighborhood). This is called the error surface.

So, training a neural network means moving along this thousand-dimensional surface of weight combinations and getting different errors. Somewhere on this surface there is a global minimum: the combination of these thousand weights for which the error is smallest.

There can be many local minima, and in them, the error value is quite low.

Therefore, training a neural network can be thought of as a meaningful movement along this thousand-dimensional surface to achieve a minimum error at some point.

As a rule, though, no one searches for the global minimum: there is only one, and the search space is huge. That is why our goal is usually just a good local minimum. Moreover, we cannot know in advance whether the minimum we have found is a local one or the global one.

But how exactly do we move along the error surface? Let's figure it out.

The important point is that we can calculate the derivative (gradient) of the error with respect to the weights. In other words, we can calculate mathematically how the error will change if we slightly change a weight in some direction.

Thus, we can calculate which way to shift the weights so that the error decreases at the next training step, provided the step is small enough.

This is how we step by step look for the necessary direction of descent along the error surface.

If we step too wide, we may overshoot the local minimum and climb higher on the error surface, which is undesirable for us.

If the step is small, we move down closer to the local minimum, then recalculate the direction of movement and take another small step.

Yes, advanced algorithms can, for example, "climb over a hill" and find a deeper local minimum, etc., but in general, the learning algorithm is built that way.

We work with a multidimensional error surface within our weights and look for where we should move to reach a local minimum, as deep as possible.
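The effect of the step size can be seen on a toy example. This sketch uses a hypothetical error surface over two weights, a simple bowl with a single minimum at W₁ = 1, W₂ = -2, unlike the crumpled surface of a real network. A small step converges to the minimum. A step that is too wide overshoots and climbs ever higher.

```python
import numpy as np

def error(w):
    # Hypothetical error surface over two weights, minimum at (1, -2).
    return (w[0] - 1.0) ** 2 + 3.0 * (w[1] + 2.0) ** 2

def gradient(w):
    # Partial derivatives of `error` with respect to each weight.
    return np.array([2.0 * (w[0] - 1.0), 6.0 * (w[1] + 2.0)])

def descend(w0, lr, steps):
    w = np.array(w0, dtype=float)
    for _ in range(steps):
        w = w - lr * gradient(w)  # step against the gradient
    return w

start = np.array([4.0, 3.0])
w_small = descend(start, lr=0.1, steps=100)  # small steps: settles at the minimum
w_big = descend(start, lr=0.4, steps=100)    # too wide: overshoots and diverges
print(w_small, error(w_small))
print(error(w_big) > error(start))           # True: the error grew
```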

2. Convolutional Neural Network

The next architecture is convolutional neural networks, which are overwhelmingly used for working with images (although one-dimensional convolutional networks can also be used for working with text).

Their design is loosely inspired by how our visual system processes information, following the principle of receptive fields.

The main feature of convolutional neural networks is that they do not have a connection between all the neurons of neighboring layers, as was the case in fully connected networks.

Here, each neuron of the next layer "looks" at a small piece (3 by 3 or 5 by 5 pixels) of the previous layer and generalizes information from it for further transmission.

This architecture allows you to gradually highlight different features on different layers. The first layers at the same time highlight the simplest features, for example:

- on the right - white, on the left - black

- in the center - bright, along the edges - dull

- a line at 45 degrees

- etc.
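Here is a minimal NumPy sketch of one convolutional "neuron" that looks at 3 by 3 patches. The kernel values are a hypothetical hand-made detector for "black on the left, white on the right". A real network learns its kernels during training. Like convolution layers in deep learning libraries, this computes a cross-correlation, meaning the kernel is not flipped.

```python
import numpy as np

def conv2d_valid(image, kernel):
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Each output value "looks" only at a kh x kw patch of the input.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)  # black on the left, white on the right
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)      # hypothetical dark-left/bright-right detector
feature_map = conv2d_valid(image, kernel)
print(feature_map)  # strong response where the black/white boundary is, 0 inside the white area
```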

The illustration below shows a one-dimensional convolutional network in which not all neurons are connected to all, and each neuron of the next layer "looks" at two neurons of the previous layer (except for the input layer on the left).

Notice also that on the right side the convolutional network becomes fully connected, i.e., the two architectures are combined. The reason for this is explained a little further below.

The selection of features occurs gradually, layer by layer, for example line - oval - paw, which is reflected in the three illustrations below.

As mentioned above, feature extraction proceeds from low-level to high-level features, after which the highest-level features are transferred to a fully connected neural network, which already performs the final classification of images and produces a result - let's say it's a cat or a dog.

3. Recurrent neural network

Recurrent networks are networks where neurons have memory.

Such neurons do not just process the vectors given to them as input. They also “remember” what they processed at previous steps of the input sequence.

Simply put, a neuron in a recurrent network has a connection with itself, and this connection is also stored in the weight matrix.
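Here is a minimal NumPy sketch of a simple recurrent layer. The matrix `W_h` is the "connection with itself": it feeds the previous hidden state back in at every step. The sizes and random weights are assumptions for illustration. Because of this memory, the final state depends on the order of the inputs.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W_x = rng.normal(scale=0.5, size=(input_size, hidden_size))   # input -> hidden
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))  # hidden -> hidden (the memory loop)
b = np.zeros(hidden_size)

def run_rnn(sequence):
    h = np.zeros(hidden_size)  # empty memory before the first step
    for x_t in sequence:
        # The new state mixes the current input with the previous state.
        h = np.tanh(x_t @ W_x + h @ W_h + b)
    return h

sequence = rng.normal(size=(6, input_size))
h_forward = run_rnn(sequence)
h_reversed = run_rnn(sequence[::-1])  # same inputs, opposite order
print(h_forward)
print(h_reversed)
```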

Recurrent networks are used for language tasks when you need to remember the structure of a sentence: where is the subject, where is the predicate, where are the prepositions, etc.

4. Generative neural network

Finally, there is a generative neural network.

In a generative network, we have two neural networks: one generates images, and the second tries to distinguish these images from some reference ones.

For example, one detector network looks at fantasy pictures, while another network tries to create new pictures and says: “Try to distinguish the fantasy pictures from mine.”

At first, the generating network produces pictures that the detector network can easily tell apart, because they don't look like fantasy pictures.

But the network that creates the pictures keeps learning, and eventually the detector network can hardly distinguish the fantasy pictures that we supplied from those generated by the second network.

5. Architectures and types of tasks

And now that we have looked at the main architectures, let's recall the types of problems that are solved by neural networks and compare them with architectures that are suitable for solving them.

1. Pattern recognition is performed by fully connected, convolutional, and recurrent networks.

2. Language problems are most often solved with recurrent networks with memory, and sometimes with one-dimensional convolutional networks.

3. Audio and voice processing uses fully connected, one-dimensional convolutional, and sometimes recurrent networks.

4. Regression and time-series forecasting tasks are solved with fully connected and recurrent networks.

5. Finally, machine creativity relies on generative convolutional and generative fully connected networks.

Thus, we see that the standard architectures overlap and are used in tasks of different types.

We will go through all these architectures: first fully connected, then convolutional, recurrent, and generative. After that, we will already study complex combinations of architectures.

How does a neural network fit into production?

So, we have come to an important stage: understanding how a neural network is embedded in Production. You will try all of this in practice in the course or in the Laboratory, but let's briefly go through the main stages here.

1. Data collection and preprocessing

First of all, we collect data and preprocess it so that it can be passed to the neural network in the appropriate form.

2. Research and development of neural network

At this step, we choose an architecture suited to the task and get initial results on the training and test sets.

Suppose our task is to recognize cats and dogs. Then we take a convolutional network.

Let's try a simple network with 5 layers of 20 neurons each, and at the end - 2 fully connected layers, and train.

As a result, we get a baseline: some initial accuracy. After that, we start thinking about how to improve it, for example:

- we create various architecture options (say, several dozen options) and collect error and accuracy statistics for each of the options

- we try different activation functions

- we improve data quality (getting rid of noisy data)

- and so on ...

As a result, by experimenting and testing our assumptions, we bring the quality of the network to the desired level.

3. Architecture and weights are loaded into the service

In the next step, we start integrating directly into Production. We will also cover this in the course: for example, as a service in Python, or delivered in some other way.

So we deploy to production, for example into a Python service, one or more architecture options along with their weights.

4. The production part calls the recognition service

And finally, our production part calls the service and performs recognition.

After the release to production, you can of course continue the research, gradually improving the network and replacing it as it is refined: the architecture, the weights, or both.

Let's say you trained a network on a relatively small amount of data, and after a while, you received 10 times more data. Of course, it is worth retraining the network on a larger amount of data and, quite possibly, improving the quality of its work.

To do this, we constantly compare the results of our network in terms of recognition accuracy on a test set.

At the same time, always remember that in the field of neural networks there are no ready-made deterministic answers: it is always research and invention.

Deep learning libraries and how we will work

Deep Learning Libraries

So, we have come with you to our main tool in creating neural networks - libraries.

What is a library? It is a set of functions that allow us to assemble and train a neural network without having to deeply understand the mathematical basis behind these processes.

Not long ago, there were no such libraries, but now there is a fairly good choice that greatly simplifies the developer's life and saves time.

We will not dwell on each library separately - this is rather a mini-review of existing solutions so that you can navigate them as a whole.

So, at present the most famous are the following libraries:

• TensorFlow (Google)

• CNTK (Microsoft)

• Theano (Montreal Institute for Learning Algorithms (MILA))

• PyTorch (Facebook)

• etc.

At the center of the illustration is the Keras library - also developed by Google, and it is with it that we will be working the most.

We will also touch on TensorFlow and Pytorch a bit, but closer to the end of the tutorial.

In the hierarchy, it looks like this, starting from the lowest-level tools:

• The video card itself (GPU)

• CUDA (hardware-software architecture for parallel computing, which allows you to significantly increase computing performance through the use of Nvidia graphics processors)

• cuDNN (a library containing GPU-optimized implementations of convolutional and recurrent networks, various activation functions, error backpropagation algorithm, etc., which allows you to train neural networks on the GPU several times faster than just CUDA)

• TensorFlow, CNTK, Theano (frameworks built on top of CUDA and cuDNN)

Keras, in turn, uses TensorFlow and some other libraries as a backend, allowing us to use ready-made code written specifically for creating neural networks.

Another important point to note is the question of how Python deals with all of this. Many people say that it works slowly.

Yes, Python itself is relatively slow, but the heavy computations do not run in Python. Keras only describes the network, and the backend (for example, TensorFlow) executes the operations using optimized compiled C++ and CUDA code, so everything works quickly.

How will we work

Now a few words about how we will work. We will write the code in the Google Colaboratory service.

They have a Python interpreter installed in the cloud along with various machine learning libraries, and we are allocated computing resources (at the time of writing, a Tesla K80 GPU) that can be used to train neural networks.

Our educational process will be built around the Google Colaboratory (code demos, homework, etc.), and in general, it is very convenient, however, if you want, you can work in your own familiar environment.

It is recommended to run Google Colaboratory in Google Chrome. The first time you open a notebook (this is what any document in Google Colaboratory is called), you may be prompted to install the Colaboratory application in the browser so that in the future it will automatically "pick up" files of this type.

Now, after installing Colaboratory, we can go directly to the practical part.

How to write a neural network in Python?

Writing code to recognize handwritten digits

So, we move on to the practical part to consolidate what we talked about above and see how to recognize handwritten digits from the MNIST dataset.

Just a few words about what MNIST is. This is a special dataset that collects a large number of images of handwritten numbers from 0 to 9.

Handwritten digit recognition has historically been applied to tasks such as reading zip codes on mail, and today MNIST is very often used for demonstration purposes, to show how simple neural networks work.

Google Colaboratory interface

The first thing we need to do is get a little familiar with the Google Colaboratory interface.

The first step is to connect to the computing resources provided by Google. To do this, in the upper right part of the window, click on Connect - connect to hosted runtime.

Wait for the connection; you should see something like this:

This means that everything went well and you can move on.

The next step we go to the top menu and select Runtime - Change runtime type

In the notebook settings window that opens, select Python 3, specify GPU (i.e., the graphics card) as the hardware accelerator, and click Save.

And finally, one last point. If we are working with a ready-made notebook and want to be able to edit it, we need to save our own copy, so we select “File – Save a copy to Drive…” in the top menu.

As a result of this action, a copy of this file will be saved to your Google Drive and will automatically open in the next tab.

We write the code for recognition

We are done with preliminary preparations and now we can go directly to the code.

Note right away that we will not dive very deeply into many things but will focus on the key points.

First of all, we include the necessary libraries:

In the first line, we import the pre-installed MNIST dataset with the images of handwritten digits that we will recognize. Then we import Sequential and Dense, because we are working with a fully connected feed-forward network.

Finally, we import additional libraries that let us work with data (NumPy, Matplotlib, etc.).

Note the modular structure of the notebook: you can alternate code blocks and text fields in any order.

In order to add a new code cell, text field or change their order, use the CODE, TEXT, and CELL buttons right below the main menu.

In order to add a comment directly to a cell, link to it, add a form or delete it, click on the three dots in the upper right part of any cell and you will see the corresponding menu.

And finally, to run the code snippet, we just need to click on the play icon at the top left of the code. It appears when a block with code is selected, or when the mouse hovers over empty square brackets if the block is not selected.

Next, we use the load_data function to load the data needed to train the network, where x_train_org and y_train_org are the training sample data, and x_test_org and y_test_org are the test sample data.

As their names suggest, we use the training sample to train the network, while the test sample is used to check how well the training went.

The purpose of the test sample is to check how accurately our network works on data it has not seen before. This is the most important measure of the network's quality.

x_train_org contains the images of the digits themselves, on which the network is trained, and y_train_org contains the correct answers, i.e., which digit is shown in each image.

It is important to note right away that the correct answers at the network's output are represented with one-hot encoding: a one-dimensional array (vector) of 10 values, each 0 or 1, where the position of the 1 indicates the correct answer.

For example, if the digit in the picture is 0, the vector will look like the first line in the picture below.

If the digit in the image is 2, the 1 in the vector will be in the third position (index 2, as in line 2).

If the digit in the image is 9, the 1 in the vector will be in the last, tenth position.

This is because the numbering in arrays starts from zero by default.

To check that the data was loaded correctly, we can pick an arbitrary index in the array of digit images and look at its contents:

After running this code, we will see the number 3.

Next, we perform a transformation of the data dimension in the training set of pictures - this is necessary for correct work with them in the future.

Initially, the training sample contains 60,000 images of 28 by 28 pixels, and the test sample contains 10,000 such images.

Our task is to turn each image into a one-dimensional array (vector) of 784 values, i.e., 1 by 784 instead of 28 by 28.

Our next step is the so-called data normalization, "aligning" the values so that they fall in the range from 0 to 1.

Before normalization, each handwritten digit image (all of them are grayscale) is represented by numbers from 0 to 255, where 0 is black and 255 is white.

However, for the network to work effectively, we need to bring them to a different range, which we do by dividing each value by 255.
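Here is a sketch of the normalization step, applied to a tiny hypothetical array of pixel values:

```python
import numpy as np

# Two hypothetical flattened "images" with raw 0..255 pixel values
pixels = np.array([[0, 51, 255],
                   [128, 255, 0]], dtype=np.uint8)

# Cast to float32 first (dividing uint8 directly would give float64,
# which uses twice the memory), then rescale 0..255 to 0..1
normalized = pixels.astype("float32") / 255

print(normalized.min(), normalized.max())  # 0.0 1.0
```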

Now let's make sure that the answer array contains the correct answers.

To do this, we can run the code below and confirm that element 157, which we displayed above, actually stores the answer 3:

The next step is to convert the array of correct answers into the one-hot encoding format described above.

This transformation is done by the to_categorical function, which turns each digit from 0 to 9 into a vector of ten values, so our correct answer 3 now takes a different form:

The 1 in the fourth position of this vector (index 3) means the digit 3.
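For integer labels from 0 to 9, the result of Keras's `to_categorical(y, 10)` can be reproduced in NumPy by picking rows of a 10 × 10 identity matrix. This is a sketch to show what the conversion does, not the Keras implementation. The labels below are hypothetical:

```python
import numpy as np

y_train_org = np.array([5, 0, 3])  # hypothetical labels

# Row k of the 10x10 identity matrix is the one-hot vector for digit k;
# float32 matches the default dtype that to_categorical returns
y_train = np.eye(10, dtype="float32")[y_train_org]

print(y_train[2])  # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
```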

Creating and training a neural network

This completes data loading and simple preprocessing. Next, we need to create a neural network and define its architecture.

In our case, we create a sequential feedforward network, the type we looked at first when we discussed types of architectures.

Next, we specify the layers of this network, which defines its architecture:

As the input layer, we add a fully connected layer of 800 neurons, each of which receives all 784 pixel intensities (the length of the vector into which we converted the original square images), and use the ReLU activation function.

The number of neurons (800) was selected empirically: with this number, the network learns best. At the start of your own research, you can try a variety of options and gradually arrive at the optimal one.

As the output layer, we use a fully connected layer of 10 neurons, one for each handwritten digit we recognize.
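To see what "fully connected" means in terms of array shapes, here is a NumPy sketch of one forward pass through the 784 → 800 → 10 network. The weights are random placeholders. This is an assumption: in the real network, Keras initializes the weights and training adjusts them. The output layer here returns raw scores before softmax is applied:

```python
import numpy as np

rng = np.random.default_rng(0)

# Placeholder weights and biases for the two fully connected layers
W1, b1 = rng.normal(0, 0.05, size=(784, 800)), np.zeros(800)
W2, b2 = rng.normal(0, 0.05, size=(800, 10)), np.zeros(10)

def relu(z):
    return np.maximum(z, 0)

x = rng.random((5, 784))        # a batch of 5 flattened, normalized "images"

hidden = relu(x @ W1 + b1)      # each of the 800 neurons sees all 784 inputs
scores = hidden @ W2 + b2       # one raw score per digit, before softmax

print(hidden.shape, scores.shape)  # (5, 800) (5, 10)
```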

Finally, the output layer uses the softmax activation function, which transforms a vector of 10 values into another vector whose coordinates are real numbers in the interval [0, 1] that sum to 1.

This function is used in machine learning for classification problems with more than two classes, and the resulting coordinates are interpreted as the probabilities that the object belongs to each class.
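The softmax formula is softmax(z)_i = exp(z_i) / Σ_j exp(z_j). Here is a NumPy sketch that uses hypothetical raw scores as input:

```python
import numpy as np

def softmax(z):
    """softmax(z)_i = exp(z_i) / sum_j exp(z_j)"""
    # Subtracting the maximum leaves the result unchanged but prevents overflow in exp
    e = np.exp(z - np.max(z))
    return e / e.sum()

# Hypothetical raw outputs of the 10 output neurons
scores = np.array([1.0, 2.0, 5.0, 0.5, -1.0, 0.0, 0.3, 2.5, 1.1, -0.2])
probs = softmax(scores)

# Every value lies in [0, 1], the sum is 1 up to floating-point rounding,
# and the largest score (index 2) gets the largest probability
print(probs.round(3), probs.sum())
```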

After that, we compile the network and display its architecture on the screen.
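The architecture summary (in Keras, `model.summary()`) also lists the number of trainable parameters. For fully connected layers, this number can be checked by hand: each layer has (inputs × neurons) weights plus one bias per neuron. The sketch below uses a hypothetical helper, `dense_param_count`, and applies it to the 784 → 800 → 10 network and to the variants tried later in this article (10 + 10, 10 + 10 + 10, and 1 + 10 neurons):

```python
def dense_param_count(layer_sizes, input_size=784):
    """Total weights + biases for a stack of fully connected layers."""
    total = 0
    n_in = input_size
    for n_out in layer_sizes:
        total += n_in * n_out + n_out  # weight matrix + bias vector
        n_in = n_out                   # this layer's outputs feed the next layer
    return total

for layers in ([800, 10], [10, 10], [10, 10, 10], [1, 10]):
    print(layers, dense_param_count(layers))
# [800, 10] 636010
# [10, 10] 7960
# [10, 10, 10] 8070
# [1, 10] 805
```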

As the loss (error) function, we use categorical_crossentropy. It is the standard choice when the network outputs class probabilities in the range from 0 to 1 and the correct answers are one-hot encoded.
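For a one-hot answer y and predicted probabilities p, categorical cross-entropy is −Σ_i y_i log(p_i). This reduces to −log of the probability assigned to the correct digit. Here is a NumPy sketch. The clipping epsilon is an assumption added to avoid log(0):

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip probabilities away from 0 so that log() stays finite (eps is an assumed value)
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return -np.sum(y_true * np.log(y_pred))

y_true = np.eye(10)[3]                  # one-hot answer for the digit 3
confident = np.full(10, 0.01)
confident[3] = 0.91                     # 91% on the correct digit
unsure = np.full(10, 0.1)               # all digits equally likely

print(categorical_crossentropy(y_true, confident))  # -ln(0.91) ≈ 0.094
print(categorical_crossentropy(y_true, unsure))     # -ln(0.1) ≈ 2.303
```

The more confident the correct prediction, the smaller the loss, which is what training drives down.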

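As the optimizer, we use adam. Like plain gradient descent, it updates the weights using the gradients computed by error backpropagation, but it adds some inertia (momentum) and adapts the step size for each weight. The metric for assessing the quality of training is accuracy, the share of correctly recognized images.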
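To make "gradient descent with inertia" concrete, here is a sketch of the Adam update rule for a single weight. The function being minimized, f(w) = (w − 3)², is a hypothetical stand-in for the network's loss. Its gradient is written by hand instead of being computed by backpropagation. The values of beta1, beta2 and eps follow commonly used Adam defaults. The learning rate of 0.1 is chosen only so this toy example converges quickly; Keras's default is 0.001:

```python
import math

def adam_minimize(grad, w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-7, steps=2000):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients ("inertia")
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)  # step scaled per weight
    return w

# Gradient of the stand-in loss (w - 3)^2 is 2 * (w - 3); the minimum is at w = 3
w = adam_minimize(lambda w: 2 * (w - 3), w=0.0)
print(round(w, 3))
```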

After that, we start training the network with the fit method:

As arguments, we pass it the training data: the images themselves and the correct answers.

We also set values for:

• batch_size=200: the number of images processed in one iteration before the weights are updated;

• epochs=20: the number of training passes over the entire sample of 60,000 images;

• verbose=1: how much information about the training process is printed (see the picture below).
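How batch_size and epochs turn into weight updates can be sketched as a plain loop over shuffled batches of indices. Keras's fit shuffles the training data before each epoch by default. The update_weights call below is a hypothetical placeholder for the actual gradient step, so it is commented out:

```python
import numpy as np

def minibatch_indices(n_samples, batch_size, rng):
    """Yield index arrays that together cover one shuffled pass (epoch) over the data."""
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]

rng = np.random.default_rng(0)
n_samples, batch_size, epochs = 60000, 200, 20

updates = 0
for epoch in range(epochs):
    for batch in minibatch_indices(n_samples, batch_size, rng):
        # update_weights(x_train[batch], y_train[batch])  # hypothetical training step
        updates += 1

print(updates // epochs, updates)  # 300 6000
```

With 60,000 images and batch_size=200, each epoch makes 300 weight updates, so 20 epochs make 6,000.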

In the screenshot, we can see the epochs following one another, each taking a little over 2 seconds, the number of processed images (60,000 per epoch), and the error value (loss) and recognition accuracy (acc) at the end of each epoch.

Analysis of training results and recognition of data from the test set

We see that after 20 epochs the error value was 0.0041 and the accuracy was 0.9987, very close to 100%.

Note that batch_size and the number of epochs are chosen experimentally, depending on the task, the network architecture, and the input data.

Once the network is trained, we can save it with the save method:

We first write the network to a file, then check that the file exists, and finally download it to our computer using the download method.

Now it's time to test how our network recognizes handwritten digits it has not seen yet. For this, we need the test set, which is included in the MNIST dataset along with the training set.

First, let's pick an arbitrary image from the test set and display it:

Having seen which digit the network should recognize, let's prepare the image for recognition by changing its shape and normalizing it:
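Keras's predict works on batches of inputs, so even a single image needs a leading batch dimension: shape (1, 784) rather than (28, 28) or (784,). Here is a sketch that uses a hypothetical stand-in image instead of a real element of the test set:

```python
import numpy as np

# Hypothetical stand-in for one test image (e.g. one element of x_test_org)
image = np.zeros((28, 28), dtype=np.uint8)
image[10:18, 14] = 255  # a crude vertical stroke of 8 white pixels

# Flatten into a batch of one and normalize exactly as for the training data
x = image.reshape(1, 784).astype("float32") / 255

print(x.shape, x.max())  # (1, 784) 1.0
```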

Then we run the recognition itself with the predict method and look at the result:

The result comes in the format already familiar to us, and we can see that the network correctly recognized the digit 2.

To display the final answer in a more readable form, we can use the argmax function. It returns the position (index) of the largest value in the vector, which here is the value closest to 1, and this index is the recognized digit.
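Here is a sketch of the argmax step on a hypothetical predict output (a batch with one vector of 10 probabilities):

```python
import numpy as np

# Hypothetical output of predict() for one image: shape (1, 10)
prediction = np.array([[0.001, 0.002, 0.97, 0.01, 0.001,
                        0.004, 0.002, 0.005, 0.004, 0.001]])

digit = np.argmax(prediction[0])  # index of the largest probability
print(digit)  # 2
```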

In the first code block, we printed the result of the predict method (after argmax) and got the value 2.

In the second code block, we printed the element of the y_test_org array at the same index and also got 2. Thus, the network recognized this image correctly.

How to research further

Now that we have some initial results, we can continue the exploration process by changing various parameters of our neural network.

For example, we can reduce the number of neurons in the input layer from 800 to 10.

To train the network from scratch after these changes, we need to recreate the model with the new layer parameters and compile it again. Otherwise, calling fit would continue training the already trained model, which is not always what we want.

To do this, we simply re-run all three code blocks in order by clicking play on each (see below).

As a result, the displayed architecture changes: the network now has two layers of 10 neurons each instead of 800 and 10.

After that, we can train the network again and get different results, for instance:

Here we see that over the same 20 epochs the network reached an accuracy of 93.93%, significantly lower than the 99.87% of the previous version.

Continuing the study, we can add another layer to the architecture, say also of 10 neurons, between the existing layers. In this way, we create a hidden layer that could potentially improve the quality of the network:

We have added another layer of 10 neurons, connected to the previous layer, which also has 10 neurons. Therefore, we set input_dim to 10. This is optional: in a sequential model, Keras infers the input size of every layer after the first from the preceding layer.

Training this version of the network gives the following values:

We see an accuracy of 93.95%, almost identical to the previous version (93.93%), so this change in architecture had almost no effect on recognition quality.

Finally, let's do one more experiment, in which all 784 values are fed to a single neuron in the input layer:

We start training and look at the result.

As we can see, even with a single neuron in that layer, the network achieved an accuracy of almost 38%, well above the 10% expected from random guessing among ten digits. Such a network is unlikely to be of practical use, but the experiment shows how much the results can vary when the architecture changes.

To wrap up the code walkthrough, an important caveat: we looked at accuracy on the training set only as an illustration of how training works.

In practice, the quality of a network should be measured exclusively on a test sample containing data the network has never encountered.
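Measured on a held-out sample, accuracy is the share of images whose most probable digit matches the correct answer. For one-hot answers, this is what Keras reports as accuracy, for example in `model.evaluate(x_test, y_test)` after compiling with metrics=['accuracy']. Here is a NumPy sketch with hypothetical labels and predictions:

```python
import numpy as np

def accuracy(y_true_onehot, y_pred_probs):
    """Share of samples whose most probable class matches the correct one."""
    return np.mean(np.argmax(y_pred_probs, axis=1) == np.argmax(y_true_onehot, axis=1))

# Hypothetical one-hot answers and predicted probabilities for 4 unseen images
y_test = np.eye(10)[[7, 2, 1, 0]]
pred = np.full((4, 10), 0.01)
pred[[0, 1, 2, 3], [7, 2, 1, 9]] = 0.91  # the last image is misclassified as 9

print(accuracy(y_test, pred))  # 0.75
```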

Now that we've experimented a bit, let's see how to approach neural network experiments systematically to get statistically significant results.

We take one network architecture and, in a loop, form 100 different splits of the available data into a training sample (80%) and a test sample (20%).

On each split, we train the network from scratch (each run starts from random weights) and measure its error on that split's test sample. Averaging these 100 errors gives the expected error of this particular architecture across different combinations of training and test sets.

Then we take another architecture and repeat the procedure. In this way, we can compare, for example, several dozen architectures with 100 training runs each, obtain statistically significant results, and choose the most accurate network.
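The repeated-split procedure above can be sketched as follows. To keep the sketch self-contained and fast, a nearest-centroid classifier on synthetic two-class data stands in for "build a fresh network with random weights and train it". Both the classifier and the data are assumptions for illustration only. With a real network, train_and_score would create, compile and fit a new model on every call:

```python
import numpy as np

def repeated_holdout(train_and_score, X, y, n_runs=100, test_frac=0.2, seed=0):
    """Mean and spread of the test error over n_runs random train/test splits."""
    rng = np.random.default_rng(seed)
    n_test = int(len(X) * test_frac)
    errors = []
    for _ in range(n_runs):
        order = rng.permutation(len(X))  # a fresh random 80/20 split each run
        test_idx, train_idx = order[:n_test], order[n_test:]
        errors.append(train_and_score(X[train_idx], y[train_idx],
                                      X[test_idx], y[test_idx]))
    return np.mean(errors), np.std(errors)

# Hypothetical stand-in for training a network: a nearest-centroid classifier
def nearest_centroid_error(X_tr, y_tr, X_te, y_te):
    classes = np.unique(y_tr)
    centroids = np.array([X_tr[y_tr == c].mean(axis=0) for c in classes])
    dists = ((X_te[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    predicted = classes[np.argmin(dists, axis=1)]
    return np.mean(predicted != y_te)  # share of misclassified test samples

# Synthetic two-class data so that the sketch runs on its own
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0, 1, (100, 2)), rng.normal(3, 1, (100, 2))])
y = np.array([0] * 100 + [1] * 100)

mean_err, std_err = repeated_holdout(nearest_centroid_error, X, y)
print(f"test error: {mean_err:.3f} +/- {std_err:.3f}")
```

To compare architectures, call repeated_holdout once per candidate, each with its own train_and_score function, and pick the one with the lowest mean error. The standard deviation shows how much the result depends on the particular split.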

That concludes this review. We hope it helped you better understand how neural networks work and how you can quickly write your first neural network in Python.
